Side Quests

Here are some fun side quests I've been working on, primarily to sharpen my skills using the latest AI tools to turn thoughts into things.

—

Karate Pose Analysis using open-source pose detection and computer vision

As a karateka, I am always polishing my technique, especially with regard to katas (sequences of blocking and attacking moves, of which there are nineteen in Ueshiro Shorin-ryu, the system in which I train). In addition to constant physical practice of the katas, it is also important to observe advanced karateka performing them, and to record video of oneself to audit one's own technique.

A year ago, building a tool to analyze those videos would have been out of reach for me, as my coding skills are limited. But now with Claude and Cursor, I feel like I have new superpowers to turn my ideas into working prototypes without having to beg my engineer pals to write the code for me. 🤯

(It's currently in a very rough, MVP state, and I have not spent time polishing the UI as I've been focusing first on getting the technical flow to work. To great success!)

Here is the simple upload screen (I know, it's ugly, but it works!)

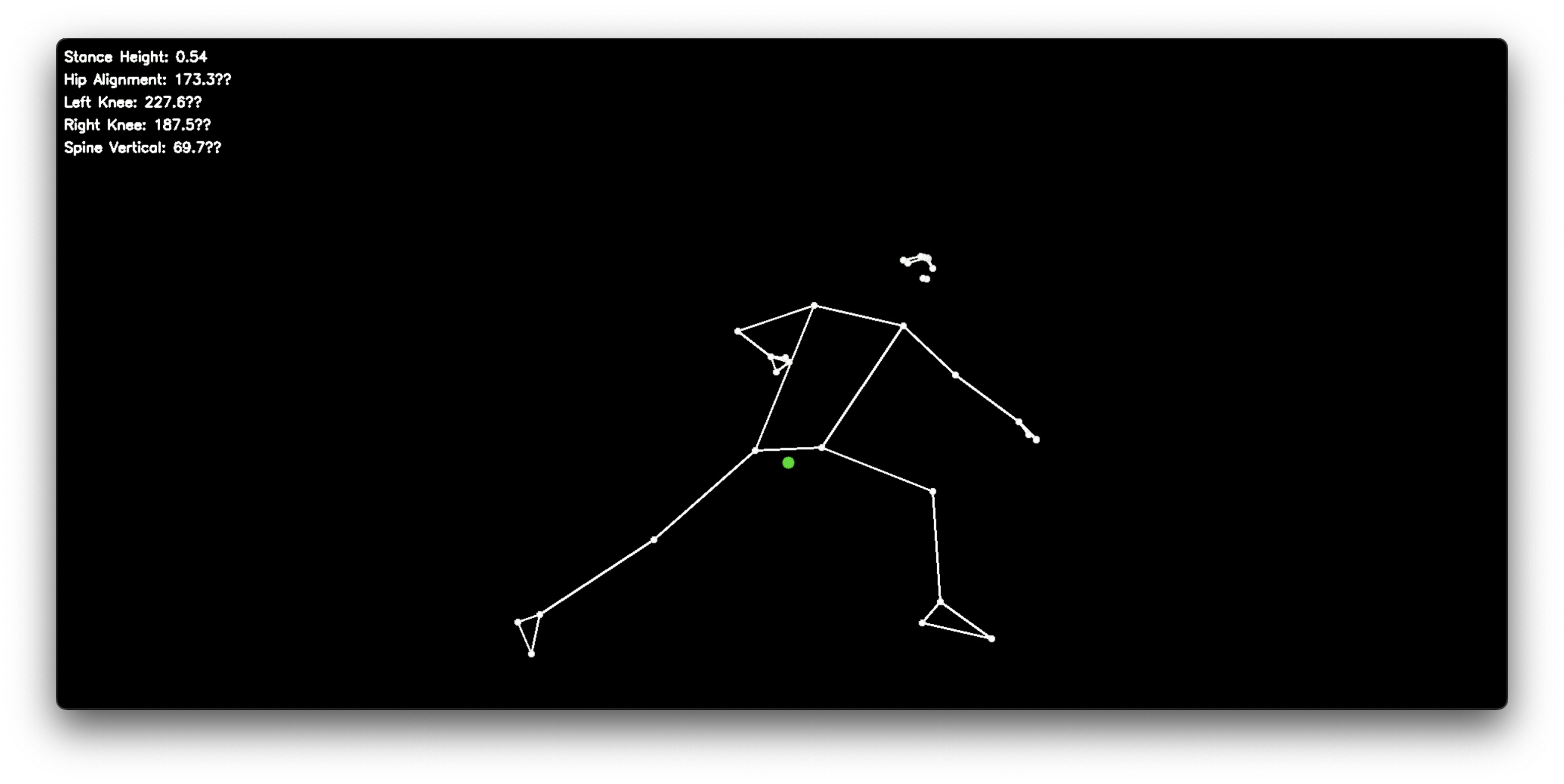

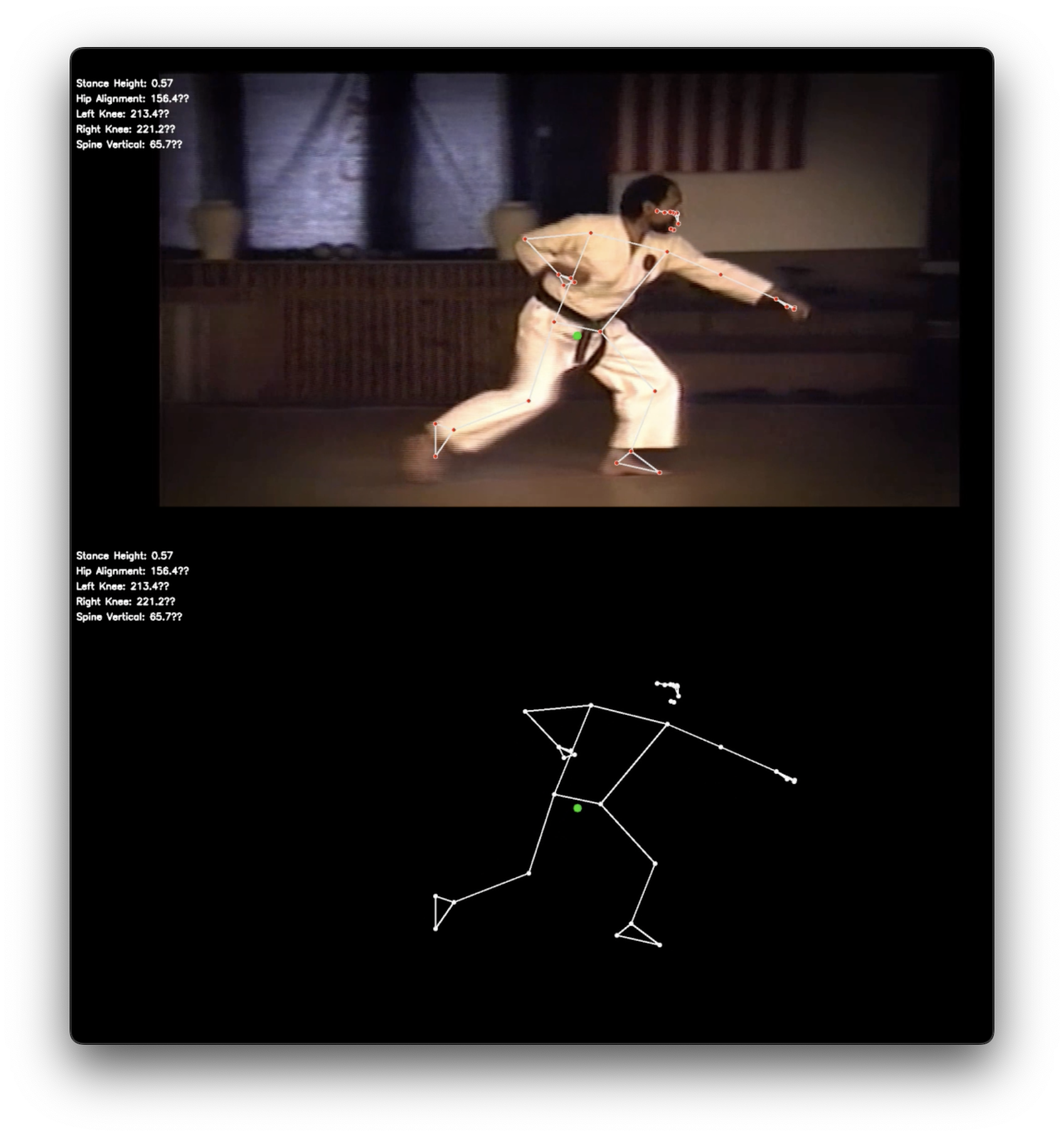

Here is a still from the output showing the original video with the pose detection skeleton overlay, and below that, only the skeleton on a black background.

I used Cursor with Claude 3.5 Sonnet built-in to help me build a simple web app to:

- Create an interface to handle the upload of an .mp4 video of a karate kata performance

- Show processing progress with a progress bar

- Handle error conditions

- Conduct a frame-by-frame analysis, using the MediaPipe Pose and OpenCV libraries to estimate and detect poses, and overlay a skeleton showing the key joint positions, as well as markers for the eyes and mouth.

- Add a green dot to indicate the karateka's center of gravity, positioned between and slightly below the hip markers to represent the "tanden."

- Apply temporal smoothing to the pose detection. I asked Claude to help me introduce this, since the source video is blurry with compression artifacts, and it worked like a charm. (There is still some jitter in the markers for the hands and feet, but I can live with it for now.)

- Export the processed videos as two separate videos for study, dropped into the Downloads folder, in a newly created folder using the file name of the uploaded video as its name, with the date and time appended:

  - VIDEO 1: The base video, with the skeleton overlaid and composited, with a 10% black layer to enhance visibility of the skeleton.

  - VIDEO 2: Only the skeleton showing the positions of the joints, limbs and face, on a black background.
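To make the tanden marker and the temporal smoothing from the list above a bit more concrete, here is a minimal sketch in Python. It is not the code from the project: the `Point` class, the `drop` offset, the `LandmarkSmoother` class and the `alpha` value are all hypothetical, and exponential smoothing is just one simple way to reduce jitter. The only thing taken from MediaPipe Pose is its landmark numbering (23 and 24 for the left and right hip) and the convention that normalized y grows downward.

```python
from dataclasses import dataclass

# MediaPipe Pose landmark indices for the hips.
LEFT_HIP, RIGHT_HIP = 23, 24


@dataclass
class Point:
    """A 2D landmark in normalized image coordinates (y grows downward)."""
    x: float
    y: float


def tanden_point(landmarks, drop=0.03):
    """Midpoint of the hips, nudged down by `drop` (an assumed offset)."""
    left, right = landmarks[LEFT_HIP], landmarks[RIGHT_HIP]
    return Point((left.x + right.x) / 2, (left.y + right.y) / 2 + drop)


class LandmarkSmoother:
    """Exponential moving average over successive frames of landmarks."""

    def __init__(self, alpha=0.5):
        # alpha near 1 follows the raw detection; near 0 smooths heavily.
        self.alpha = alpha
        self.state = None

    def update(self, landmarks):
        if self.state is None:
            # First frame: nothing to blend with yet.
            self.state = [Point(p.x, p.y) for p in landmarks]
        else:
            a = self.alpha
            self.state = [
                Point(a * p.x + (1 - a) * s.x, a * p.y + (1 - a) * s.y)
                for p, s in zip(landmarks, self.state)
            ]
        return self.state
```

In a setup like this, each frame's detected landmarks would go through `update()` first, and the skeleton and the green dot would be drawn from the smoothed result. A lower `alpha` calms the hands and feet more, at the cost of the skeleton lagging behind fast techniques.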

And now I've got my own app that I can feed any new video. It took about an hour of effort to get this assembled. CRAZY.
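For the export step, the folder naming and the 10% black layer could look something like the sketch below. Again, this is an illustration under my own assumptions rather than the project's code: the function names, the timestamp format and the example file name are all made up.

```python
from datetime import datetime
from pathlib import Path


def output_dir_for(video_path, now=None, downloads=None):
    """Folder in Downloads named after the upload, with date and time appended."""
    now = now or datetime.now()
    downloads = Path(downloads) if downloads else Path.home() / "Downloads"
    stem = Path(video_path).stem  # file name without the .mp4 extension
    # The timestamp format here is an assumption.
    return downloads / f"{stem}_{now.strftime('%Y-%m-%d_%H-%M-%S')}"


def dim(value, opacity=0.10):
    """Blend one 0-255 channel value with black at the given opacity."""
    return round(value * (1 - opacity))


if __name__ == "__main__":
    # Hypothetical upload name, just to show the resulting folder path.
    print(output_dir_for("my_kata.mp4"))
```

Applying `dim` to every channel of every pixel darkens the base video by 10% before the skeleton is drawn on top, which is what lets the overlay stand out.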

Technology used for this project: Cursor, Claude 3.5 Sonnet, MediaPipe Pose, and OpenCV.

Want to run it yourself?

Here's the project on GitHub:

https://github.com/aud10pilot/kata-analysis